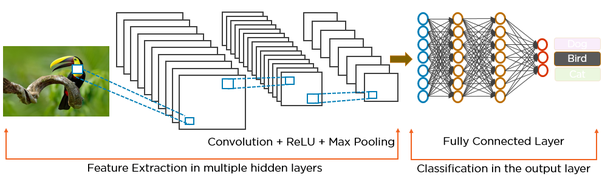

Convolutional Neural Networks

- Overview

- Alternative View

- More detail about CNN

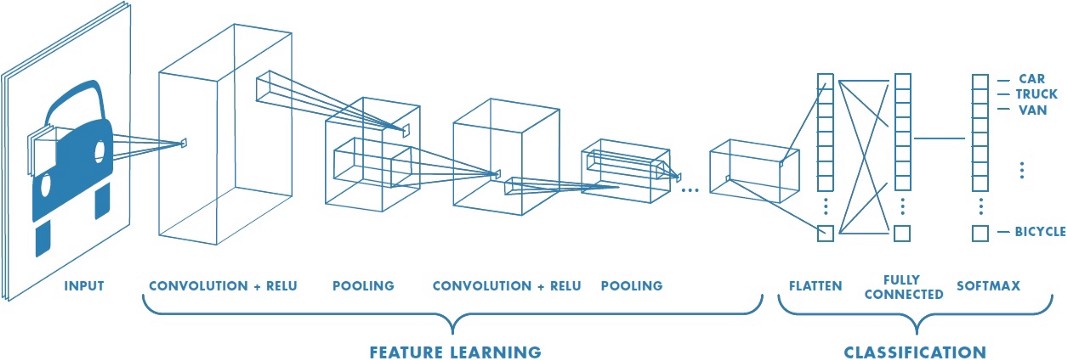

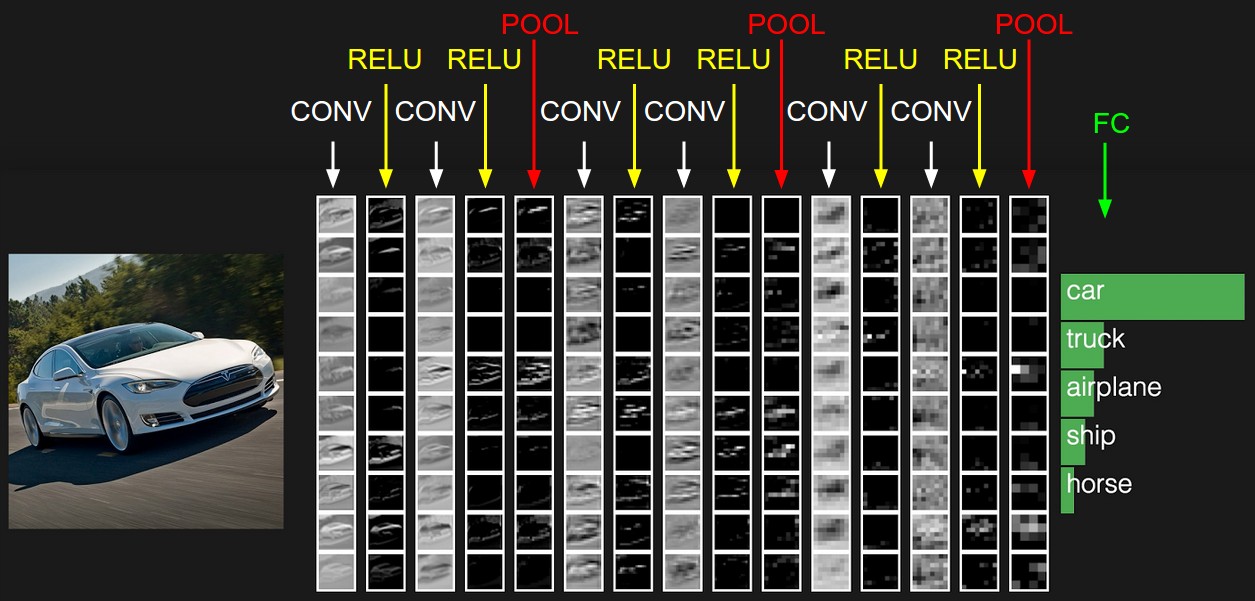

Basic Architecture

Multiple convolution layers

Alternative Views

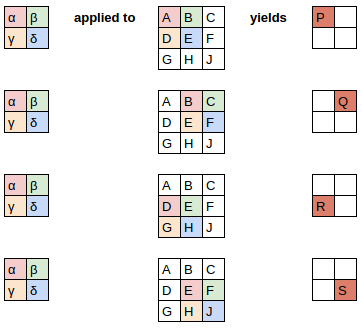

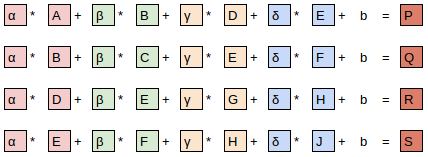

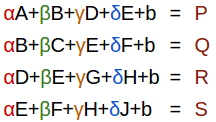

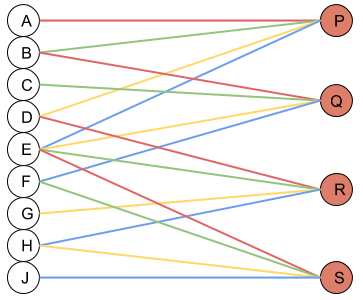

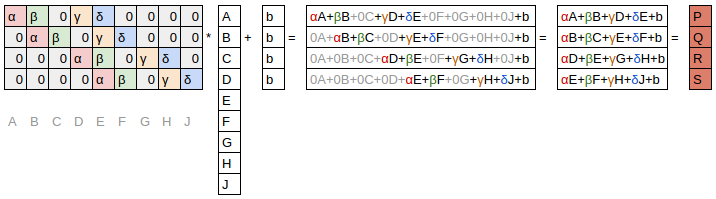

Convolution Layer

Stride and Padding

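- Stride is the number of pixels by which the filter shifts over the input matrix at each step.

- Padding: when the filter does not fit the input exactly, either pad the picture with zeros (zero-padding) so that it fits, or drop the parts of the picture where the filter does not fit (valid padding).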
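A minimal NumPy sketch of how stride and padding change the output, assuming a single-channel image and a square kernel. Like most CNN libraries, it slides the kernel without flipping it (cross-correlation); `conv2d` and its parameters are hypothetical names chosen for illustration.

```python
import numpy as np

def conv2d(image, kernel, stride=1, padding=0):
    """Slide `kernel` over `image` (no kernel flip, as in most CNN layers).

    padding > 0: zero-pad every side of the image so the filter fits.
    padding = 0: "valid" mode, positions where the filter does not fit are dropped.
    """
    if padding > 0:
        image = np.pad(image, padding, mode="constant", constant_values=0)
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            r, c = i * stride, j * stride          # top-left corner of this window
            out[i, j] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out

img = np.arange(25, dtype=float).reshape(5, 5)
k = np.ones((3, 3))
print(conv2d(img, k).shape)             # (3, 3)  valid, stride 1
print(conv2d(img, k, stride=2).shape)   # (2, 2)  valid, stride 2
print(conv2d(img, k, padding=1).shape)  # (5, 5)  zero-padded, stride 1
```

A larger stride skips positions and shrinks the output; zero-padding lets the filter be centred on border pixels and keeps the output larger.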

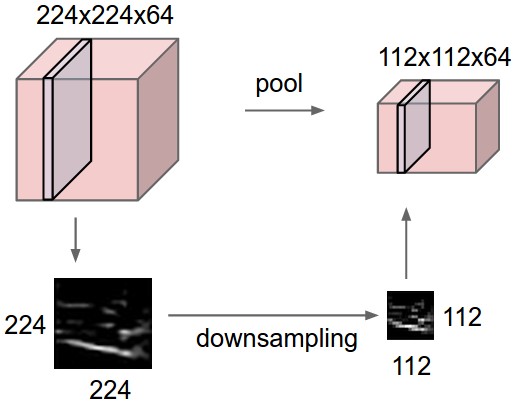

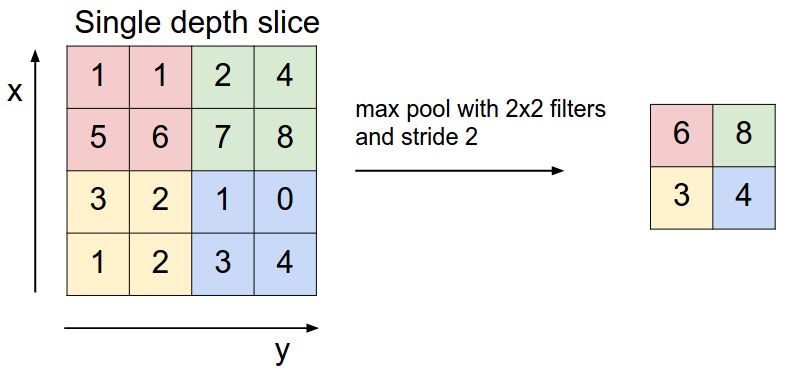

Pooling Layer

- Reduces the number of parameters and the amount of computation in later layers

- Spatial pooling, also called subsampling or downsampling, reduces the dimensionality of each feature map but retains the important information.

Max Pooling

Average Pooling

Sum Pooling
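The three pooling types differ only in how each window is reduced. A small NumPy sketch, assuming a single 2-D feature map and a 2×2 window with stride 2; `pool2d` is a hypothetical helper, not a library function.

```python
import numpy as np

def pool2d(fmap, size=2, stride=2, mode="max"):
    """Downsample a 2-D feature map with max, average, or sum pooling."""
    reducers = {"max": np.max, "average": np.mean, "sum": np.sum}
    reduce = reducers[mode]
    out_h = (fmap.shape[0] - size) // stride + 1
    out_w = (fmap.shape[1] - size) // stride + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            r, c = i * stride, j * stride
            out[i, j] = reduce(fmap[r:r + size, c:c + size])
    return out

fmap = np.array([[1, 1, 2, 4],
                 [5, 6, 7, 8],
                 [3, 2, 1, 0],
                 [1, 2, 3, 4]], dtype=float)
print(pool2d(fmap, mode="max"))      # -> [[6, 8], [3, 4]]
print(pool2d(fmap, mode="average"))  # -> [[3.25, 5.25], [2, 2]]
print(pool2d(fmap, mode="sum"))      # -> [[13, 21], [8, 8]]
```

Each 4×4 map becomes 2×2 in every mode; pooling itself has no learnable parameters.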

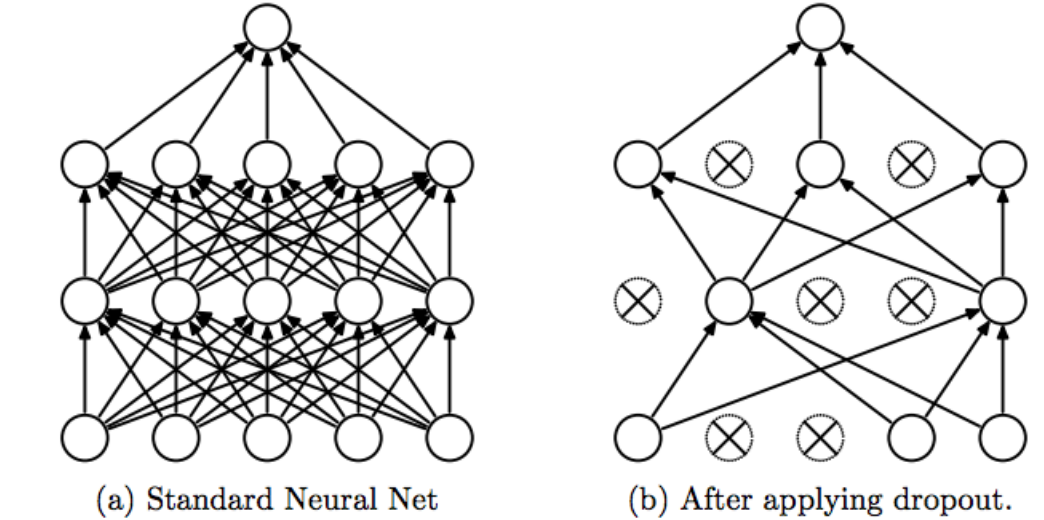

Regularization

- Dropout

- Data Augmentation

- Early Stopping

Dropout: A Simple Way to Prevent Neural Networks from Overfitting

- During training, each unit (i.e., neuron) is ignored with probability $1-p$ (kept with probability $p$); the set of ignored neurons is chosen at random.

- These units are not considered during a particular forward or backward pass.

- The network learns more robust features that are useful in conjunction with many different random subsets of the other neurons.

- Dropout roughly doubles the number of iterations required to converge; however, the training time for each epoch is shorter.
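The dropout rule above can be sketched in NumPy as follows, with $p$ as the keep probability. This uses *inverted* dropout, a common variant that rescales the kept units by $1/p$ during training instead of scaling the weights by $p$ at test time as in the original paper. The `dropout` function and its arguments are hypothetical.

```python
import numpy as np

def dropout(activations, p, training, rng):
    """Inverted dropout with keep probability p.

    Training: each unit is zeroed with probability 1 - p and the survivors are
    scaled by 1/p, so the expected activation stays the same.
    Testing: the layer does nothing.
    """
    if not training:
        return activations
    mask = rng.random(activations.shape) < p   # True means "keep this unit"
    return activations * mask / p

rng = np.random.default_rng(0)
a = np.ones((1000, 100))
out = dropout(a, p=0.8, training=True, rng=rng)
print((out == 0).mean())   # fraction of ignored units, close to 1 - p = 0.2
print(out.mean())          # close to 1.0 because of the 1/p scaling
```

A fresh mask is drawn on every call, so each forward/backward pass trains a different random sub-network.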

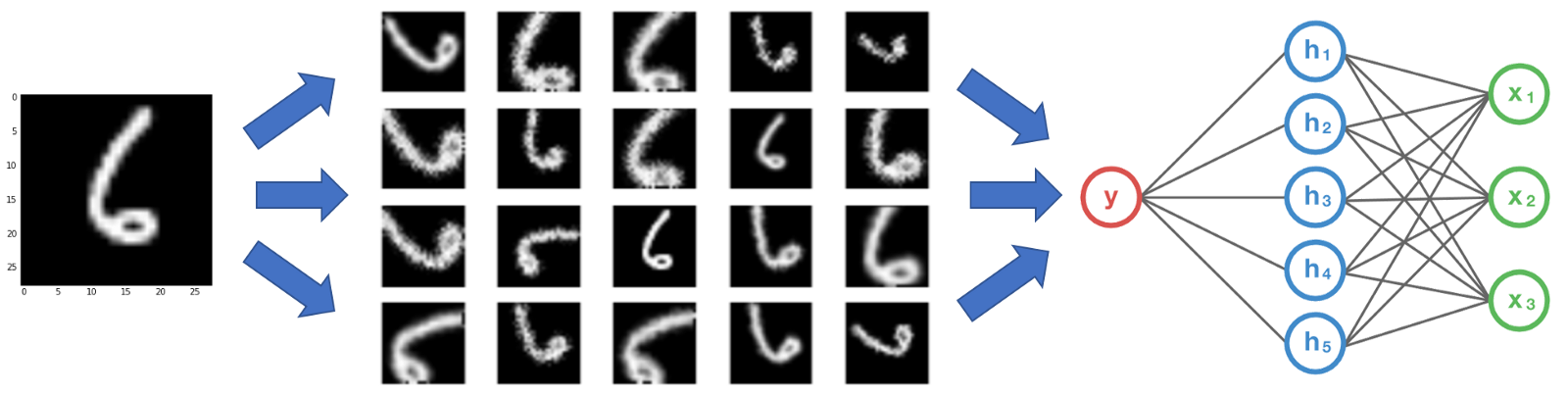

Data Augmentation

Early Stopping

Well Known ConvNets

- LeNet

- AlexNet

- ZF Net

- GoogLeNet

- VGGNet

- ResNet

Application

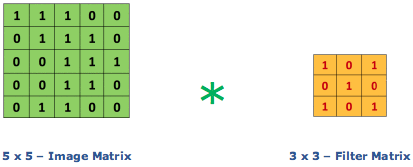

*More detail about CNN*

$G[m,n]=(f*h)[m,n]=\sum_{j}\sum_{k} h[j,k]\,f[m-j,n-k]$
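To make the formula concrete, the sketch below evaluates the double sum literally, with $f$ as the image and $h$ as the kernel, keeping only the "valid" positions where every index $m-j$, $n-k$ lies inside the image. It also checks a standard identity: true convolution equals the unflipped sliding-window product (cross-correlation) with the kernel flipped in both axes. Function names are hypothetical.

```python
import numpy as np

def convolve2d_valid(f, h):
    """Evaluate G[m, n] = sum_j sum_k h[j, k] * f[m - j, n - k] literally.

    Only 'valid' outputs are kept: m >= kh - 1 and n >= kw - 1, so that
    every index m - j, n - k falls inside the image f.
    """
    H, W = f.shape
    kh, kw = h.shape
    G = np.zeros((H - kh + 1, W - kw + 1))
    for m in range(kh - 1, H):
        for n in range(kw - 1, W):
            total = 0.0
            for j in range(kh):
                for k in range(kw):
                    total += h[j, k] * f[m - j, n - k]
            G[m - kh + 1, n - kw + 1] = total
    return G

def cross_correlate_valid(f, h):
    """Sliding-window product without flipping the kernel."""
    kh, kw = h.shape
    out_h, out_w = f.shape[0] - kh + 1, f.shape[1] - kw + 1
    return np.array([[np.sum(f[i:i + kh, j:j + kw] * h) for j in range(out_w)]
                     for i in range(out_h)])

rng = np.random.default_rng(1)
f = rng.standard_normal((6, 6))
h = rng.standard_normal((3, 3))
# Convolution == cross-correlation with the kernel flipped in both axes.
print(np.allclose(convolve2d_valid(f, h), cross_correlate_valid(f, h[::-1, ::-1])))  # True
```

Because the kernel is learned, CNN layers usually skip the flip; the learned weights simply end up flipped relative to the convolution formula.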

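Padding ("same" convolution): $p = (f-1)/2$, where $f$ is the (odd) filter size; with stride 1 this keeps the output the same size as the input.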

Striding

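$n_{out}=\left\lfloor\frac{n_{in}+2p-f}{s}\right\rfloor+1$, where $n_{in}$ is the input size, $p$ the padding, $f$ the filter size and $s$ the stride.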
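The padding and output-size formulas can be checked with a few lines of integer arithmetic (one spatial dimension; the same formula applies to height and width separately). The helper names and the example sizes are illustrative assumptions.

```python
def conv_output_size(n_in, f, p=0, s=1):
    """n_out = floor((n_in + 2p - f) / s) + 1 for one spatial dimension."""
    return (n_in + 2 * p - f) // s + 1

def same_padding(f):
    """p = (f - 1) / 2: keeps n_out == n_in when s = 1 and f is odd."""
    if f % 2 == 0:
        raise ValueError("symmetric 'same' padding needs an odd filter size")
    return (f - 1) // 2

print(conv_output_size(32, f=5))                     # 28  (no padding)
print(conv_output_size(32, f=5, p=same_padding(5)))  # 32  ('same' padding, p = 2)
print(conv_output_size(7, f=3, p=0, s=2))            # 3   (stride 2)
```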

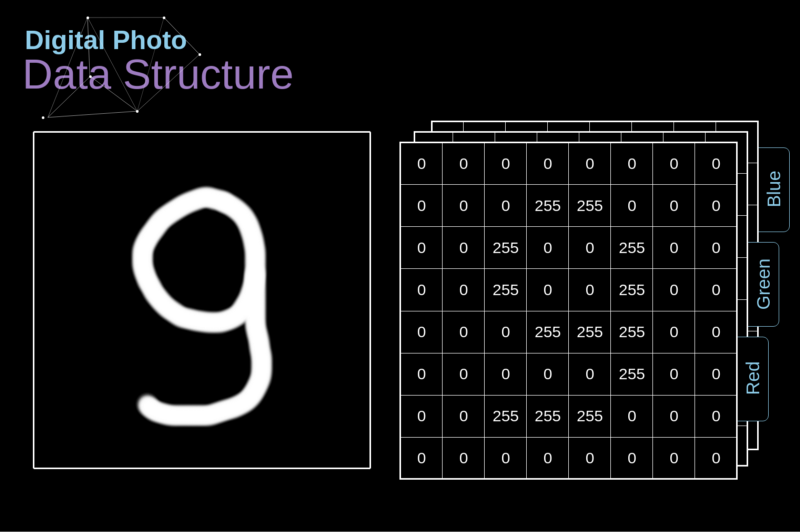

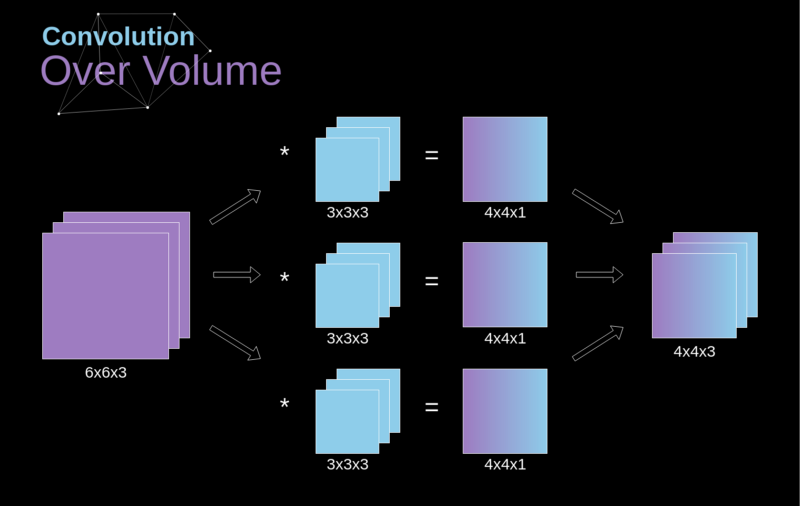

The third dimension

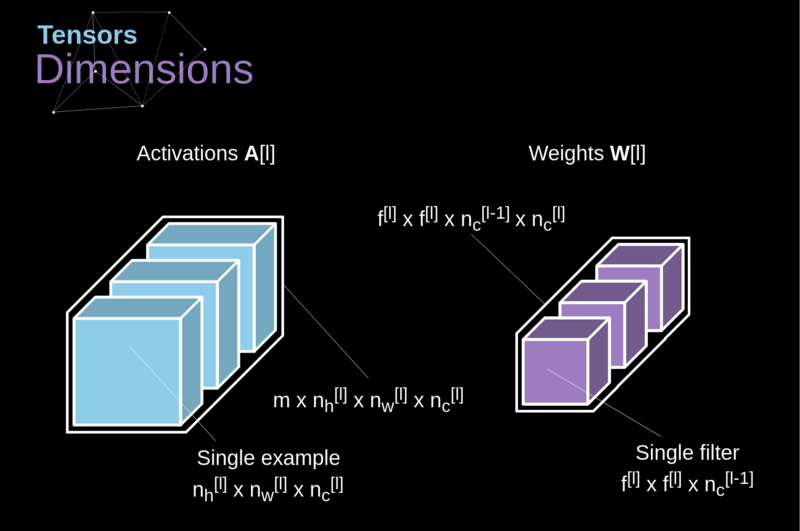

Tensor Dimensions

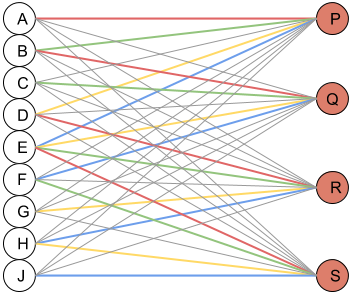

Connections Cutting and Parameters Sharing

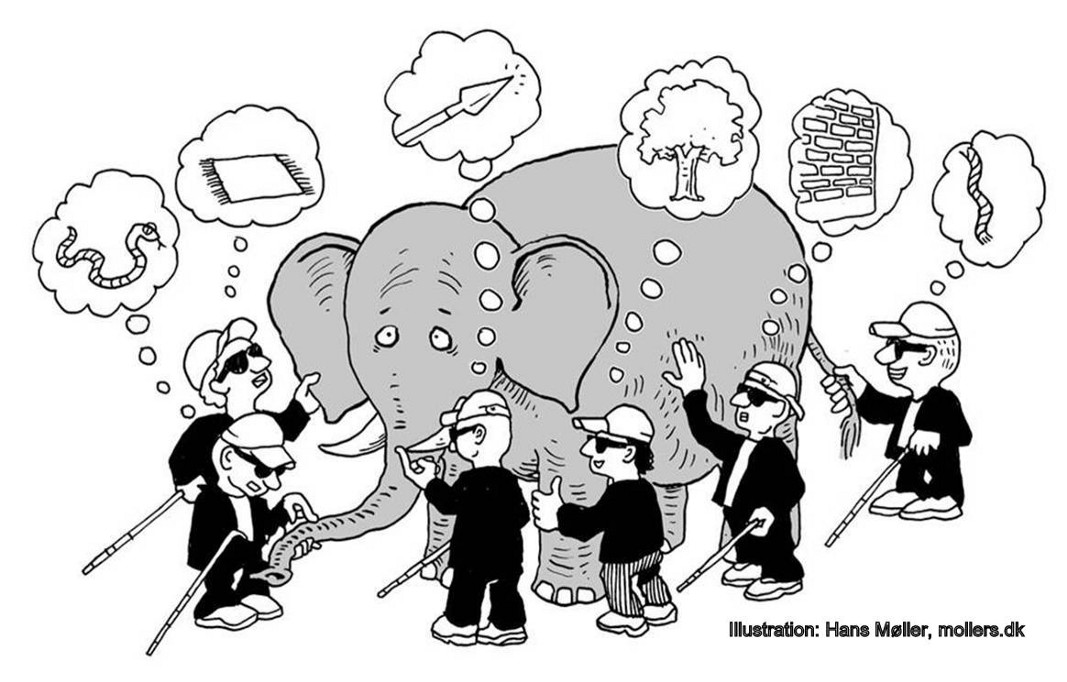

Two important features of CNNs

- Connections Cutting

- Parameters Sharing
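A quick parameter count shows why these two features matter. The sketch compares a convolutional layer with a fully connected layer that produces the same number of output units; the layer sizes (a 32×32×3 input and six 5×5 filters, stride 1, no padding) are a hypothetical example.

```python
def conv_params(f, c_in, n_filters):
    """Each filter has f*f*c_in weights shared across all positions, plus one bias."""
    return (f * f * c_in + 1) * n_filters

def dense_params(n_in, n_out):
    """Every output unit connects to every input unit, plus one bias per output."""
    return n_in * n_out + n_out

# Hypothetical layer: 32x32x3 input, 6 filters of size 5x5, stride 1, no padding.
h = w = 32
c_in, f, n_filters = 3, 5, 6
out_units = (h - f + 1) * (w - f + 1) * n_filters    # 28 * 28 * 6 = 4704
print(conv_params(f, c_in, n_filters))                # 456
print(dense_params(h * w * c_in, out_units))          # 14455392
```

Connection cutting means each output unit sees only a 5×5×3 patch, and parameter sharing means the same 456 values are reused at every position, instead of roughly 14.5 million independent weights.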

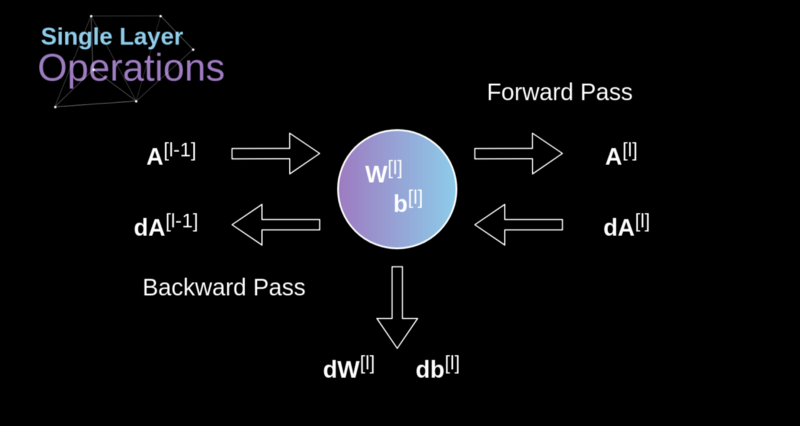

Convolutional Layer Backpropagation

$dZ^{[l]}=dA^{[l]}\odot g'(Z^{[l]})$, where $\odot$ is the elementwise product and $g$ is the activation function of layer $l$.

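$dA\mathrel{+}=\sum_{m=0}^{n_h-1}\sum_{n=0}^{n_w-1}W\cdot dZ[m,n]$, where $n_h\times n_w$ is the size of $dZ$ and each term $W\cdot dZ[m,n]$ is added to the window of $dA$ that the filter covered when computing output position $[m,n]$.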
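A sketch of these two backpropagation steps, assuming a single channel, stride 1, no padding and ReLU as $g$. In the code, `dA` is the gradient of the layer's output ($dA^{[l]}$) and `dA_prev` is the accumulated input gradient from the sum above. The filter gradient `dW` and bias gradient `db` are not on the slide; they are the standard companion gradients, included so the result can be checked against finite differences. All names are hypothetical.

```python
import numpy as np

def conv_forward(A_prev, W, b):
    """Single-channel 'valid' convolution (no kernel flip), stride 1."""
    f = W.shape[0]
    n_h, n_w = A_prev.shape[0] - f + 1, A_prev.shape[1] - f + 1
    Z = np.zeros((n_h, n_w))
    for m in range(n_h):
        for n in range(n_w):
            Z[m, n] = np.sum(A_prev[m:m + f, n:n + f] * W) + b
    return Z

def conv_backward(dA, Z, A_prev, W):
    """Gradients of ReLU(conv_forward(A_prev, W, b)) given dA = dL/d(output)."""
    dZ = dA * (Z > 0)                 # dZ = dA (elementwise) g'(Z), with g = ReLU
    f = W.shape[0]
    dA_prev = np.zeros_like(A_prev)
    dW = np.zeros_like(W)
    for m in range(dZ.shape[0]):
        for n in range(dZ.shape[1]):
            # Output [m, n] sends W * dZ[m, n] back to the window it was computed from.
            dA_prev[m:m + f, n:n + f] += W * dZ[m, n]
            dW += A_prev[m:m + f, n:n + f] * dZ[m, n]
    db = dZ.sum()
    return dA_prev, dW, db

def numeric_grad(fn, X, eps=1e-6):
    """Central finite differences, used only to check the analytic gradients."""
    grad = np.zeros_like(X)
    for idx in np.ndindex(X.shape):
        E = np.zeros_like(X)
        E[idx] = eps
        grad[idx] = (fn(X + E) - fn(X - E)) / (2 * eps)
    return grad

rng = np.random.default_rng(2)
A_prev = rng.standard_normal((5, 5))
W = rng.standard_normal((3, 3))
b = 0.1
G = rng.standard_normal((3, 3))   # loss L = sum(ReLU(Z) * G), so dL/d(output) = G

Z = conv_forward(A_prev, W, b)
dA_prev, dW, db = conv_backward(G, Z, A_prev, W)

num_dA = numeric_grad(lambda X: np.sum(np.maximum(conv_forward(X, W, b), 0) * G), A_prev)
num_dW = numeric_grad(lambda K: np.sum(np.maximum(conv_forward(A_prev, K, b), 0) * G), W)
print(np.allclose(dA_prev, num_dA, atol=1e-6), np.allclose(dW, num_dW, atol=1e-6))
```

Overlapping windows are why the input gradient uses `+=`: an input pixel that contributed to several outputs collects a gradient from each of them.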

Pooling Layer

Pooling Layer Backpropagation
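Pooling has no weights, so backpropagation only has to route the upstream gradient back to the input positions. A NumPy sketch, assuming 2×2 windows with stride 2: max pooling sends each gradient to the position that held the maximum (the first one, if there are ties), and average pooling spreads it evenly over the window. Function names are hypothetical.

```python
import numpy as np

def maxpool_backward(dOut, A, size=2, stride=2):
    """Max pooling: the whole upstream gradient goes to the max of each window."""
    dA = np.zeros_like(A)
    for i in range(dOut.shape[0]):
        for j in range(dOut.shape[1]):
            r, c = i * stride, j * stride
            window = A[r:r + size, c:c + size]
            mr, mc = np.unravel_index(np.argmax(window), window.shape)
            dA[r + mr, c + mc] += dOut[i, j]   # all other positions get zero
    return dA

def avgpool_backward(dOut, A, size=2, stride=2):
    """Average pooling: the upstream gradient is split evenly over each window."""
    dA = np.zeros_like(A)
    for i in range(dOut.shape[0]):
        for j in range(dOut.shape[1]):
            r, c = i * stride, j * stride
            dA[r:r + size, c:c + size] += dOut[i, j] / (size * size)
    return dA

A = np.array([[1, 1, 2, 4],
              [5, 6, 7, 8],
              [3, 2, 1, 0],
              [1, 2, 3, 4]], dtype=float)
dOut = np.array([[1, 2],
                 [3, 4]], dtype=float)
print(maxpool_backward(dOut, A))
# -> nonzero only at the maxima: dA[1,1]=1, dA[1,3]=2, dA[2,0]=3, dA[3,3]=4
print(avgpool_backward(dOut, A))
# -> each 2x2 block filled with dOut[i, j] / 4
```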

Machine Learning

Applications and practices